Use Cases

Test Data

From the same configuration file, generate dummy data or anonymize production data (for example, for GDPR compliance or to protect business data) before providing it to development teams and contractors.
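As an illustration only, the sketch below shows how one rule set can drive both anonymization and dummy-data generation. The rule names (`pseudonymize`, `fake`, `keep`), the `COLUMN_RULES` layout and `SECRET_KEY` are hypothetical stand-ins for the configuration file, not the product's actual format. Pseudonymization here uses a keyed HMAC, so the same input always yields the same token and joins across tables keep working.

```python
import hashlib
import hmac
import random

# Hypothetical rule set standing in for the configuration file; names are illustrative.
COLUMN_RULES = {
    "customer_id": {"rule": "pseudonymize"},
    "name": {"rule": "fake", "values": ["Alex Doe", "Sam Roe", "Kim Poe", "Lee Moe"]},
    "country": {"rule": "keep", "values": ["DE", "CH", "AT"]},  # values used only for dummy data
}

SECRET_KEY = b"replace-with-a-managed-secret"  # assumption: key is stored outside the data set


def pseudonymize(value: str) -> str:
    """Deterministic keyed hash: same input, same token; the original is not exposed."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def anonymize_row(row: dict) -> dict:
    """Apply the configured rule to each column of one production row."""
    out = {}
    for column, value in row.items():
        spec = COLUMN_RULES.get(column, {"rule": "keep"})
        if spec["rule"] == "pseudonymize":
            out[column] = pseudonymize(str(value))
        elif spec["rule"] == "fake":
            # Seed from the token so the same real value always gets the same fake value.
            out[column] = random.Random(pseudonymize(str(value))).choice(spec["values"])
        else:
            out[column] = value
    return out


def generate_dummy_rows(n: int, seed: int = 0) -> list:
    """Generate n synthetic rows from the same rule set (no production data involved)."""
    rng = random.Random(seed)
    rows = []
    for i in range(n):
        row = {}
        for column, spec in COLUMN_RULES.items():
            if spec["rule"] == "pseudonymize":
                row[column] = pseudonymize(f"dummy-{i}")
            else:
                row[column] = rng.choice(spec["values"])
        rows.append(row)
    return rows
```

A keyed hash rather than a plain hash is used so that tokens cannot be reproduced by someone who only knows the input values.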

QVD to Hadoop Workflows

High-performance conversion of Qlik QVD files to Avro, making the data available in Impala or Hive tables on Hadoop.

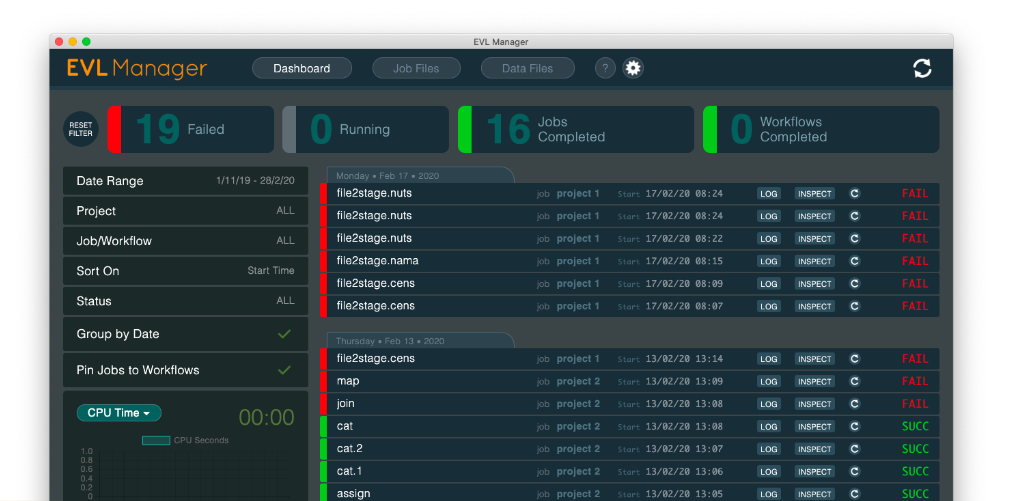
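One early step in a QVD-to-Avro conversion can be sketched as follows, assuming a QVD file begins with a plain XML header (`<QvdTableHeader>` with `TableName` and `QvdFieldHeader/FieldName` elements) followed by binary data. The sketch derives an Avro record schema from that header only. Mapping every field to a nullable string is a simplifying assumption, and the function names are hypothetical; decoding the binary symbol and index tables is not shown.

```python
import re
import xml.etree.ElementTree as ET

HEADER_END = b"</QvdTableHeader>"


def read_qvd_header(data: bytes) -> ET.Element:
    """Parse the XML header at the start of a QVD file (binary part is ignored)."""
    end = data.find(HEADER_END)
    if end < 0:
        raise ValueError("no QvdTableHeader found")
    return ET.fromstring(data[: end + len(HEADER_END)])


def avro_name(name: str) -> str:
    """Avro names must match [A-Za-z_][A-Za-z0-9_]*; replace other characters."""
    cleaned = re.sub(r"[^A-Za-z0-9_]", "_", name)
    return cleaned if re.match(r"[A-Za-z_]", cleaned) else "_" + cleaned


def qvd_header_to_avro_schema(header: ET.Element) -> dict:
    """Build an Avro record schema; every field becomes a nullable string (assumption)."""
    table = header.findtext("TableName", default="qvd_table")
    fields = [
        {"name": avro_name(f.findtext("FieldName", default="")), "type": ["null", "string"]}
        for f in header.iter("QvdFieldHeader")
    ]
    return {"type": "record", "name": avro_name(table), "fields": fields}
```

The resulting dictionary can be serialized with `json.dumps` and handed to any Avro writer that accepts a JSON schema.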

Extract, Load, Transform (ELT) Workflows

Get data from various sources, such as Oracle, Teradata, Kafka, or CSV files, and provide a historized base stage in Parquet files for further processing by Spark, Impala, or Hive. Everything is metadata-driven, based on configuration files, and orchestrated and managed through a GUI.
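A "historized base stage" keeps every version of a record instead of overwriting it. The sketch below assumes an SCD type 2 style of historization with `valid_from`/`valid_to` dates and full source snapshots; the `Version` class and `historize` function are hypothetical, and writing the result to Parquet is left out to keep the example to the standard library.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional


@dataclass
class Version:
    key: str
    attributes: Dict[str, str]
    valid_from: date
    valid_to: Optional[date] = None  # None marks the currently valid version


def historize(history: List[Version], snapshot: Dict[str, Dict[str, str]],
              load_date: date) -> List[Version]:
    """Merge a full source snapshot into the history (modified in place and returned).

    - new key: open a new version
    - changed attributes: close the current version and open a new one
    - key missing from the snapshot: close the current version (treated as deleted)
    """
    current = {v.key: v for v in history if v.valid_to is None}
    for key, attrs in snapshot.items():
        open_version = current.get(key)
        if open_version is None:
            history.append(Version(key, dict(attrs), load_date))
        elif open_version.attributes != attrs:
            open_version.valid_to = load_date
            history.append(Version(key, dict(attrs), load_date))
    for key, open_version in current.items():
        if key not in snapshot:
            open_version.valid_to = load_date
    return history
```

Treating a missing key as a deletion only holds for full snapshots; incremental sources such as Kafka topics would need an explicit delete marker instead.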

Cleanse Roaming TAP Files

High-performance decoding and cleansing of ASN.1-encoded TAP (Transferred Account Procedure) files: separate out invalid records and files, and provide JSON output for subsequent processing.
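A minimal sketch of the decode, separate, and emit-JSON flow, assuming the records use BER (tag-length-value) encoding and have already been split into individual byte strings. It is a generic TLV walker, not a TAP3 schema decoder: field names are plain tag numbers, tag classes are ignored, indefinite lengths are rejected, and all function names are hypothetical.

```python
import json
from typing import List, Tuple


class DecodeError(ValueError):
    pass


def read_tag(buf: bytes, pos: int, end: int) -> Tuple[int, bool, int]:
    """Return (tag_number, constructed, new_pos); the class bits are ignored."""
    if pos >= end:
        raise DecodeError("truncated tag")
    first = buf[pos]
    pos += 1
    constructed = bool(first & 0x20)
    number = first & 0x1F
    if number == 0x1F:  # high-tag-number form: base-128 digits, high bit = "more follow"
        number = 0
        while True:
            if pos >= end:
                raise DecodeError("truncated long tag")
            b = buf[pos]
            pos += 1
            number = (number << 7) | (b & 0x7F)
            if not b & 0x80:
                break
    return number, constructed, pos


def read_length(buf: bytes, pos: int, end: int) -> Tuple[int, int]:
    """Return (length, new_pos) for short- or long-form definite lengths."""
    if pos >= end:
        raise DecodeError("truncated length")
    first = buf[pos]
    pos += 1
    if first < 0x80:
        return first, pos
    count = first & 0x7F
    if count == 0:
        raise DecodeError("indefinite length not supported in this sketch")
    if pos + count > end:
        raise DecodeError("truncated long length")
    return int.from_bytes(buf[pos:pos + count], "big"), pos + count


def decode(buf: bytes, pos: int, end: int) -> list:
    """Decode all TLVs in buf[pos:end]; constructed values are decoded recursively."""
    items = []
    while pos < end:
        tag, constructed, pos = read_tag(buf, pos, end)
        length, pos = read_length(buf, pos, end)
        if pos + length > end:
            raise DecodeError(f"value of tag {tag} overruns its container")
        value = decode(buf, pos, pos + length) if constructed else buf[pos:pos + length].hex()
        items.append({"tag": tag, "value": value})
        pos += length
    return items


def cleanse(records: List[bytes]) -> Tuple[str, list]:
    """Return (JSON of valid records, list of rejected records with reasons)."""
    valid, invalid = [], []
    for index, raw in enumerate(records):
        try:
            items = decode(raw, 0, len(raw))
            if len(items) != 1:
                raise DecodeError("expected exactly one top-level element")
            valid.append(items[0])
        except DecodeError as exc:
            invalid.append({"record": index, "error": str(exc), "raw": raw.hex()})
    return json.dumps(valid), invalid
```

Rejected records keep their raw bytes as hex, so they can be inspected or reprocessed separately from the JSON output.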